What Are Deepfakes?

Deepfakes are AI-generated videos, images, or audio that mimic the appearance and sound of a person with such precision that they can deceive both people and algorithms.

Deepfakes can now be generated in real time, and they appear most commonly in videos and augmented reality filters. While many consumer apps, such as FaceSwap, use deepfake technology for entertainment, the growing accessibility of this technology has led to its exploitation for malicious purposes.

How Do Deepfakes Work?

The term “deepfake” combines deep learning (a subset of machine learning) and fake, referring to media created through AI techniques.

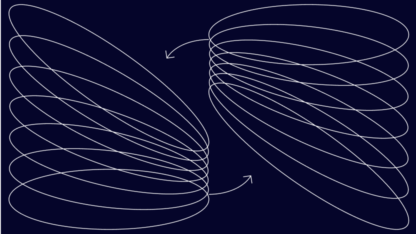

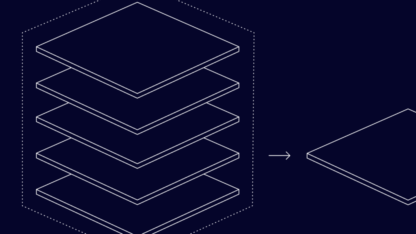

Deepfakes are typically produced with deep learning models, most notably generative adversarial networks (GANs). A GAN consists of two neural networks:

- Generator: Creates synthetic images, audio or video.

- Discriminator: Evaluates the outputs of the generator and attempts to distinguish these from real data.

This back-and-forth process continues until the generator produces content so convincing that the discriminator can no longer reliably identify it as fake.
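The adversarial game above can be sketched through the losses the two networks try to minimize. This minimal example assumes the standard binary cross-entropy losses from the original GAN formulation, with the "non-saturating" generator loss commonly used in practice. No networks are trained here: the inputs are made-up discriminator outputs (probabilities that a sample is real), chosen only to show how the losses behave.

```python
import math
from typing import Sequence


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy loss for the discriminator.

    d_real: discriminator outputs (probability "real") on real samples.
    d_fake: discriminator outputs on samples produced by the generator.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: low when the discriminator
    assigns a high "real" probability to generated samples."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


# Early in training: the discriminator separates real from fake easily.
print(round(discriminator_loss([0.9], [0.1]), 3))  # 0.211
print(round(generator_loss([0.1]), 3))             # 2.303

# The end state described in the text: the discriminator outputs 0.5 for
# everything (it can no longer tell real from fake), so its loss is 2 * ln 2.
print(round(discriminator_loss([0.5], [0.5]), 3))  # 1.386
```

In a real system both networks are neural networks, and training alternates between gradient steps that lower the discriminator's loss and steps that lower the generator's loss.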

Initially, deepfakes required extensive training data, making celebrities popular targets. However, advancements now allow the creation of deepfakes from a single image or short audio clip.

Why Are Deepfakes Dangerous?

Not all deepfake uses are malicious — many applications serve entertainment or creative purposes. However, their misuse raises serious concerns.

Non-consensual AI-generated videos are extremely harmful and exploitative. Due to their ability to convincingly misrepresent reality, deepfakes have been used in online fraud, disinformation, hoaxes, extortion and revenge porn.

According to 2019 research by Sensity, a company that detects and monitors deepfakes, 96% of deepfake videos identified online were pornographic, and 90% of those featured women. Deepfakes have also been used for extortion, with perpetrators threatening to release fabricated private videos to coerce victims.

Alarmingly, children have also been targeted, with AI technology being misused to create and distribute explicit synthetic images and videos involving minors. This represents a significant expansion of harm facilitated by deepfake technology, raising serious ethical and legal concerns.

In addition to personal exploitation, deepfake technology has been increasingly deployed in political campaigns to manipulate public opinion. Examples include synthetic videos and audio clips used to create false narratives about political candidates or influence elections, often undermining the democratic process.

Deepfakes undermine trust in media and digital platforms by blurring the line between authentic and manipulated content. They also pose security risks, since they can be used to bypass biometric authentication systems, such as facial and voice recognition, in sensitive applications like banking and access control.

3 Examples of Deepfakes

Let’s look at three well-known examples.

- BuzzFeed’s synthetic Obama video voiced by Jordan Peele

- Convincing GAN-generated human faces on ThisPersonDoesNotExist.com

- The popular Reface face swap app

1. The Obama/Jordan Peele Deepfake Video

Perhaps the most famous example of a deepfake, and the one that largely brought the technology into the mainstream spotlight, is an April 2018 video released by BuzzFeed that paired a synthetic Barack Obama with the voice of the real Jordan Peele. At the time of writing, it had amassed more than 8.7 million views on YouTube.

2. People (and Cats) Who Do Not Exist – Examples of Image Deepfakes

Our second example is the website This Person Does Not Exist, which loads a new GAN-generated human face every time you refresh it.

While many of the results are very convincing, there is the occasional glitch and an overall uncanny valley effect. Nevertheless, the website is so popular that it has inspired spinoffs, including This Cat Does Not Exist.

3. An App That Swaps Your Face with a Celebrity’s

Available on both the App Store and Google Play, Reface, a mobile app by Neocortext, Inc., boasts ratings of 4.8/5 and 4.5/5 stars respectively on the two marketplaces.

Reface allows users to deepfake themselves by swapping their faces into celebrity and meme videos, GIFs and images. Its library is largely crowdsourced.

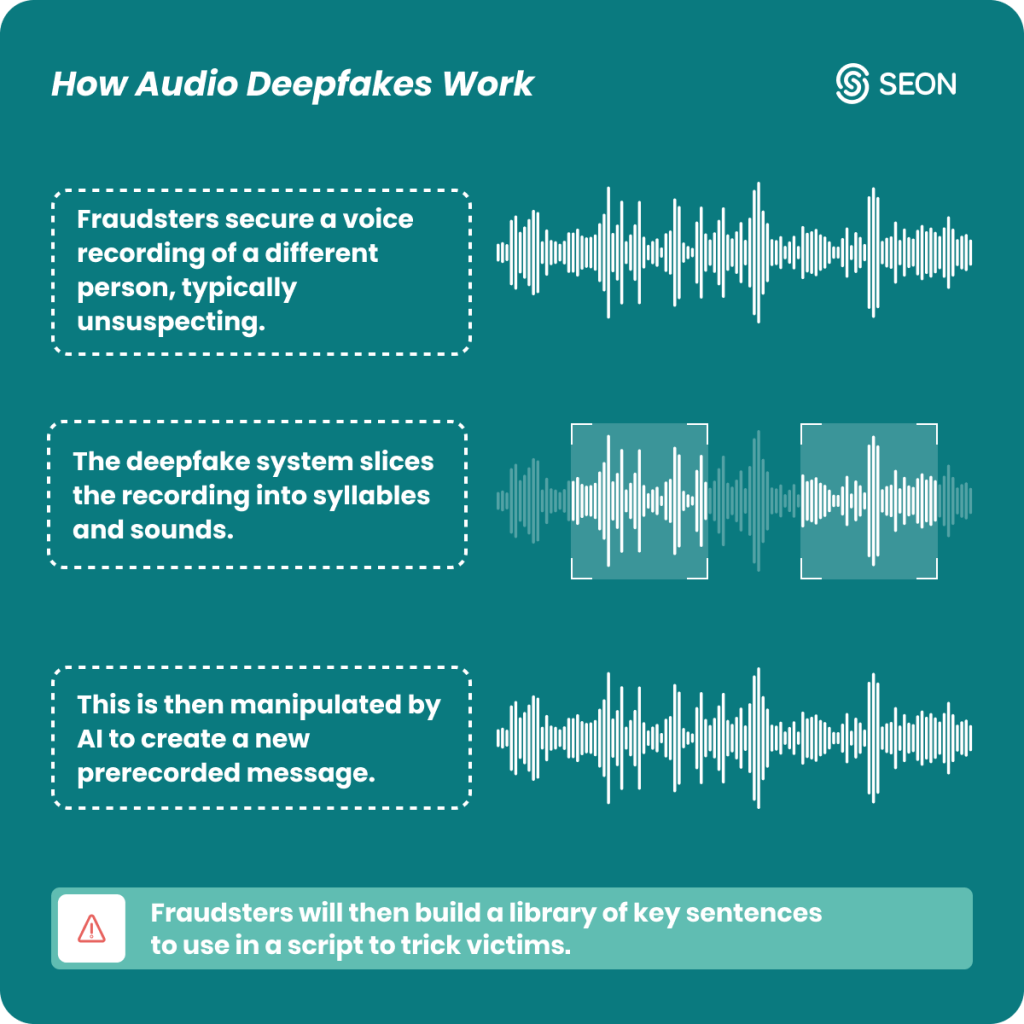

How Does Deepfake Fraud Work?

Deepfake fraud typically relies on technology that allows the fraudster to impersonate another person, usually someone the victim knows. This could be a manager, the company’s CEO or a distant relative: a trusted figure the victim doesn’t want to disappoint or disobey, yet someone they do not interact with often enough to notice subtle differences in their mannerisms.

Using the voice and face of a trusted person, the fraudster will instruct the victim to transfer money, share sensitive data or grant unauthorized access.

There are already several prominent examples of this type of fraud. In one case, a managing director was convinced by a deepfake voice, created with voice cloning, to transfer $240,000 to an unfamiliar supplier in another country.

How to Spot Deepfake Technology

A deepfake often evokes an uncanny feeling, and close inspection can reveal subtle imperfections that give it away. Training the eye to spot these inconsistencies (such as unnatural facial movements, irregular lighting, or mismatched audio and visual cues) and combining this skill with reliable verification techniques makes it possible, in many cases, to distinguish deepfakes from genuine content.

Telltale signs that can hint at manipulation:

- Inconsistent lip color with the face.

- Skin inconsistencies (too smooth, too wrinkled, or age mismatch with hair).

- Shadow anomalies around the eyes.

- Glare issues on glasses.

- Unnatural facial hair or moles.

- Blinking patterns (too much or too little).

- Odd lip movement or unrealistic mouth area.
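Blinking is one of the signs on this list that is comparatively easy to measure. A widely used measure is the eye aspect ratio (EAR), which drops sharply when the eyelids close. The sketch that follows is a minimal illustration, not a detector: it assumes six landmarks per eye have already been extracted from each video frame by a face landmark detector (not shown), and the 0.2 threshold and 2-frame minimum are illustrative assumptions that would need tuning for real footage.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio from six landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid directly beneath them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame: Sequence[float],
                 threshold: float = 0.2,      # assumed "eye closed" cutoff
                 min_frames: int = 2) -> int:  # assumed minimum blink length
    """Count runs of at least `min_frames` consecutive frames below `threshold`."""
    blinks = 0
    closed_run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    if closed_run >= min_frames:  # clip ended while the eye was closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_per_frame: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip; kwargs are passed to count_blinks."""
    minutes = len(ear_per_frame) / fps / 60.0
    return count_blinks(ear_per_frame, **kwargs) / minutes


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
```

The resulting rate is only a hint. It would be compared against a baseline range chosen by the analyst, and newer generation methods may reproduce natural blinking, so an ordinary blink rate does not prove a video is genuine.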

How Is Deepfake Fraud Impacting Businesses?

With deepfake technology becoming more accessible, fraudsters are now able to impersonate executives, employees, or clients with alarming precision, creating significant business vulnerabilities. Research by Regula highlights the financial toll, reporting average losses of $450,000 per company across various sectors.

Beyond direct financial losses, the reputational fallout can be equally damaging, as manipulated media can tarnish a company’s image. Even if the company debunks the scam, the damage may already be done. Industries that rely on trust, such as finance, law, and healthcare, are particularly susceptible.

As the sophistication of these scams increases, so do the costs for businesses. Companies are now forced to allocate significant resources to cybersecurity measures, AI-driven fraud detection tools and employee training programs to mitigate this escalating risk.

Because deepfakes are essentially impersonations, synthetic voices have become a popular tool for CEO fraud.

Consumers and employees may be targeted by deepfake phishing attempts. They can also become victims of identity theft, as fraudsters may use deepfakes to defeat KYC onboarding processes that rely on face matching and other biometric verification.
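To illustrate why face matching alone is vulnerable, here is a minimal sketch of an onboarding decision. It assumes a face-recognition model (not shown) has turned the ID photo and the onboarding selfie into numeric embeddings, compared here with cosine similarity. A convincing deepfake of the ID holder can produce a near-identical embedding, so the sketch adds a second, hypothetical liveness score. The names `OnboardingAttempt` and `decide`, the example embeddings, and both thresholds are illustrative assumptions, not any vendor's API.

```python
import math
from dataclasses import dataclass
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two face embeddings (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


@dataclass
class OnboardingAttempt:
    # Hypothetical inputs: embeddings from some face-recognition model (not shown)
    document_embedding: Sequence[float]  # face on the ID document
    selfie_embedding: Sequence[float]    # face captured during onboarding
    liveness_score: float                # hypothetical liveness score in [0, 1]


def decide(attempt: OnboardingAttempt,
           match_threshold: float = 0.8,       # placeholder value
           liveness_threshold: float = 0.9) -> str:  # placeholder value
    """Face match first, then a liveness check on the same capture."""
    similarity = cosine_similarity(attempt.document_embedding, attempt.selfie_embedding)
    if similarity < match_threshold:
        return "reject"  # faces do not match
    if attempt.liveness_score < liveness_threshold:
        return "review"  # face matches, but the capture may be synthetic or replayed
    return "accept"


id_face = [0.10, 0.90, 0.40]
# A deepfake of the ID holder can match the ID photo almost perfectly,
# so a face-match-only check would accept it; the liveness step flags it.
deepfake = OnboardingAttempt(id_face, [0.12, 0.88, 0.41], liveness_score=0.3)
print(decide(deepfake))  # review
```

The ordering is a design choice: a face mismatch is rejected outright, while a match with a weak liveness signal is routed to manual review rather than silently accepted.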

Criminals and fraudsters keep finding new ways to use deepfakes, including extortion, blackmail and industrial espionage, so companies, organizations and private individuals should remain vigilant.

Sources

- arXiv: Am I a Real or Fake Celebrity?

- arXiv: Generative Adversarial Networks

- Deepfakesweb: Online Deepfake Maker

- This Person Does Not Exist: Home page

- EU Observer: ‘Deepfakes’ – a political problem already hitting the EU

- Regmedia: The State of Deepfakes

- This Cat Does Not Exist: Home page